Social Media

Facebook tightens policies around self-harm and suicide

Timed with World Suicide Prevention Day, Facebook is tightening its policies around some difficult topics including self-harm, suicide, and eating disorder content after consulting with a series of experts on these topics. It’s also hiring a new Safety Policy Manager to advise on these areas going forward. This person will be specifically tasked with analyzing the impacts of Facebook’s policies and its apps on people’s health and well-being, and will explore new ways to improve support for the Facebook community.

The social network, like others in the space, has to walk a fine line when it comes to self-harm content. On the one hand, allowing people to openly discuss their mental health struggles with family, friends, and other online support groups can be beneficial. But on the other, science indicates that suicide can be contagious, and that clusters and outbreaks are real phenomena. Meanwhile, graphic imagery of self-harm can unintentionally promote the behavior.

With its updated policies, Facebook aims to prevent the spread of more harmful imagery and content.

It changed its policy around self-harm images to no longer allow graphic cutting images which can unintentionally promote or trigger self-harm. These images will not be allowed even if someone is seeking support or expressing themselves to aid their recovery, Facebook says.

The same content will also now be more difficult to find on Instagram through search and Explore.

And Facebook has tightened its policy regarding eating disorder content on its apps to prevent an expanded range of content that could contribute to eating disorders. This includes content that focuses on the depiction of ribs, collar bones, thigh gaps, concave stomach, or protruding spine or scapula, when shared with terms related to eating disorders. It will also ban content that includes instructions for drastic and unhealthy weight loss, when shared with those same sorts of terms.

It will also display a sensitivity screen over healed self-harm cuts going forward to help avoid unintentionally promoting self-harm.

Even when it takes content down, Facebook says it will now continue to send resources to people who posted self-harm or eating disorder content.

Facebook will additionally add Orygen’s #chatsafe guidelines to its Safety Center and to resources on Instagram. These guidelines are meant to help those who are responding to suicide-related content posted by others or are looking to express their own thoughts and feelings on the topic.

The changes came about over the course of the year, following Facebook’s consultations with a variety of experts in the field, across a number of countries including the U.S., Canada, U.K., Australia, Brazil, Bulgaria, India, Mexico, Philippines, and Thailand. Several of the policies were updated prior to today, but Facebook is now publicly announcing the combined lot.

The company says it’s also looking into giving academic researchers access, by way of the CrowdTangle monitoring tool, to public data from its platform on how people talk about suicide. Previously, the tool was made available primarily to newsrooms and media publishers.

Suicide helplines provide help to those in need. Contact a helpline if you need support yourself or need help supporting a friend. Click here for Facebook’s list of helplines around the world.

-

Business7 days ago

Business7 days agoLangdock raises $3M with General Catalyst to help businesses avoid vendor lock-in with LLMs

-

Entertainment6 days ago

Entertainment6 days agoWhat Robert Durst did: Everything to know ahead of ‘The Jinx: Part 2’

-

Entertainment6 days ago

Entertainment6 days agoThis nova is on the verge of exploding. You could see it any day now.

-

Business6 days ago

Business6 days agoIndia’s election overshadowed by the rise of online misinformation

-

Business5 days ago

Business5 days agoThis camera trades pictures for AI poetry

-

Business6 days ago

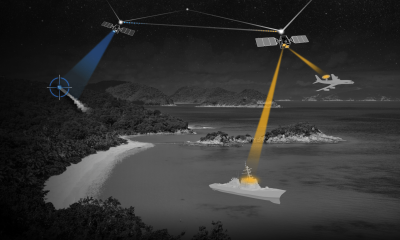

Business6 days agoCesiumAstro claims former exec spilled trade secrets to upstart competitor AnySignal

-

Business4 days ago

Business4 days agoTikTok Shop expands its secondhand luxury fashion offering to the UK

-

Business5 days ago

Business5 days agoBoston Dynamics unveils a new robot, controversy over MKBHD, and layoffs at Tesla