Your social media photos could be training facial recognition AI without your consent

If your face has ever appeared in a photo on Flickr, it could currently be training facial recognition technology without your permission.

According to a report by NBC News, IBM has been using around one million images from the image-hosting platform to train its facial recognition AI, without the permission of the people in the photos.

In January, IBM revealed its new “Diversity in Faces” dataset, with the goal of making facial recognition systems fairer and better at identifying a diverse range of faces. AI algorithms have had difficulty in the past recognising women and people of colour.

Given the potential uses of facial recognition technology, from hardcore surveillance and finding missing persons to detecting celebrity stalkers, tagging social media images, or unlocking your phone or house, many people might not want their faces used for this type of AI training, particularly if it involves pinpointing people by gender or race.

IBM’s dataset drew upon a huge collection of around 100 million Creative Commons-licensed images, referred to as the YFCC-100M dataset and released by Flickr’s former owner, Yahoo, for research purposes. Many CC image databases are used for academic research into facial recognition, as well as for fun comparison projects.

IBM used approximately one million of these images for its own “training dataset.” According to NBC, which was able to view the collection, the images have all been annotated with various estimates, such as age and gender, as well as physical details: skin tone, the size and shape of facial features, and pose.

But while IBM was using properly licensed Creative Commons images, the company hadn’t told the people whose faces appear in the almost one million images what their faces, not just the photos, were being used for.

Sure, the people in the images may have given permission for photos of themselves to be uploaded to Flickr and listed under a CC license, but they weren’t given a chance to consent to their faces being used to train AI facial recognition systems.

NBC talked to several people whose images had appeared in IBM’s dataset, including a PR executive who has hundreds of images sitting in the collection.

“None of the people I photographed had any idea their images were being used in this way,” Greg Peverill-Conti told the news outlet. “It seems a little sketchy that IBM can use these pictures without saying anything to anybody.”

Flickr co-founder Caterina Fake also revealed that IBM was using 14 of her photos.

“IBM says people can opt out, but is making it impossible to do so,” she tweeted.

Want to opt out? It’s not that easy, although IBM confirmed to NBC that anyone who would like their image removed from the dataset can request it by emailing the company a link to the photo.

The only problem? The dataset isn’t publicly available, only to researchers, so Flickr users and the people featured in their images have no real way of knowing whether they’re included.

Luckily, NBC created a handy little tool to check whether your photos are included: you just have to enter your Flickr username.

Mashable has reached out to IBM for comment.

-

Business6 days ago

Business6 days agoLangdock raises $3M with General Catalyst to help businesses avoid vendor lock-in with LLMs

-

Entertainment5 days ago

Entertainment5 days agoWhat Robert Durst did: Everything to know ahead of ‘The Jinx: Part 2’

-

Entertainment5 days ago

Entertainment5 days agoThis nova is on the verge of exploding. You could see it any day now.

-

Business5 days ago

Business5 days agoIndia’s election overshadowed by the rise of online misinformation

-

Business4 days ago

Business4 days agoThis camera trades pictures for AI poetry

-

Business5 days ago

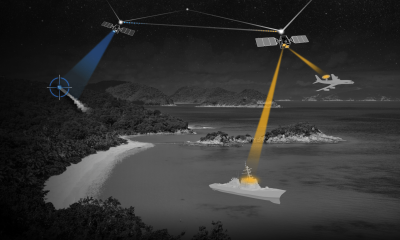

Business5 days agoCesiumAstro claims former exec spilled trade secrets to upstart competitor AnySignal

-

Business7 days ago

Business7 days agoScreen Skinz raises $1.5 million seed to create custom screen protectors

-

Entertainment7 days ago

Entertainment7 days agoDating culture has become selfish. How do we fix it?