Watch Samsung’s AI turn Mona Lisa into a talking head video

Image: Samsung AI Center, Moscow

Need more convincing that it will soon be impossible to tell whether a video of a person is real or fake? Enter Samsung’s new research, in which a neural network can turn a still image into a disturbingly convincing video.

Researchers at the Samsung AI Center in Moscow achieved this, Motherboard reported Thursday, by training a “deep convolutional network” on a large number of videos of talking heads. This allowed the network to identify certain facial features, and the researchers then used that knowledge to animate a still image.

The results, presented in a paper called “Few-Shot Adversarial Learning of Realistic Neural Talking Head Models,” are not as good as some of the deepfake videos you’ve seen. To create those, however, you need a large number of images of the person you’re trying to animate. The advantage of Samsung’s approach is that it can turn a single still image into a video, though the fidelity of the result improves with more images.

You can see some of the results of this research in the video below. Using a single still image of Fyodor Dostoevsky, Salvador Dali, Albert Einstein, Marilyn Monroe and even the Mona Lisa, the AI was able to create videos of them talking that are, at moments, realistic enough to pass for actual footage.

None of these videos will fool an expert, or anyone looking closely enough. But as we’ve seen in previous research on AI-generated imagery, the results tend to improve vastly in a matter of years.

The implications of this research are chilling. Armed with this tool, one only needs a single photo of a person (easily obtainable today for most people) to create a video of them talking. Add a tool that can use short snippets of sample audio to create a convincing fake of a person’s voice, and one can get anyone to “say” anything. With tools like Nvidia’s GAN, one could even create a realistic-looking fake setting for such a video. As these tools become more powerful and easier to obtain, it will become tougher to tell real videos from fake ones; hopefully, the tools for telling the two apart will advance as well.

-

Business6 days ago

Business6 days agoLangdock raises $3M with General Catalyst to help businesses avoid vendor lock-in with LLMs

-

Entertainment6 days ago

Entertainment6 days agoWhat Robert Durst did: Everything to know ahead of ‘The Jinx: Part 2’

-

Entertainment5 days ago

Entertainment5 days agoThis nova is on the verge of exploding. You could see it any day now.

-

Business5 days ago

Business5 days agoIndia’s election overshadowed by the rise of online misinformation

-

Business5 days ago

Business5 days agoThis camera trades pictures for AI poetry

-

Business6 days ago

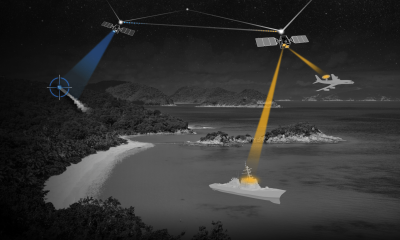

Business6 days agoCesiumAstro claims former exec spilled trade secrets to upstart competitor AnySignal

-

Business7 days ago

Business7 days agoInternet users are getting younger; now the UK is weighing up if AI can help protect them

-

Business4 days ago

Business4 days agoTikTok Shop expands its secondhand luxury fashion offering to the UK