Technology

UK watchdog to hold social media companies to account over harmful content

Social media companies like Facebook and Instagram will be subject to more scrutiny in the UK over harmful content appearing on their platforms, with new powers awarded to a government-appointed regulator.

The UK government has announced plans to appoint media watchdog Ofcom as an online regulator, giving it powers to act swiftly on harmful content on social media.

Ofcom, which currently regulates TV and radio organisations like the BBC, will be able to hold tech and social media companies to account if they do not adequately protect users from harmful content, including that associated with terrorism and child abuse.

Platforms involving user-generated content (that includes comments, forums, or video sharing) like Snapchat, Facebook, Twitter, and Instagram, will need to ensure that illegal content is removed swiftly, and to take measures to reduce the possibility of it appearing in the first place.

The government also said it will make sure Ofcom has a clear responsibility to protect users’ rights online, including safeguarding free speech, defending press freedom, and “promoting tech innovation.”

Digital secretary Nicky Morgan and home secretary Priti Patel announced the appointment on Wednesday, coinciding with the government publishing its response to the public consultation on the Online Harms White Paper, an outline of the government’s plans to tackle online safety.

“While the internet can be used to connect people and drive innovation, we know it can also be a hiding place for criminals, including paedophiles, to cause immense harm,” said Patel. “It is incumbent on tech firms to balance issues of privacy and technological advances with child protection. That’s why it is right that we have a strong regulator to ensure social media firms fulfil their vital responsibility to vulnerable users.”

“We share the government’s ambition to keep people safe online and welcome that it is minded to appoint Ofcom as the online harms regulator,” said Jonathan Oxley, Ofcom’s interim chief executive, in a press statement. “We will work with the government to help ensure that regulation provides effective protection for people online and, if appointed, will consider what voluntary steps can be taken in advance of legislation.”

Regulations will not inhibit people “accessing or posting legal content that some may find offensive,” according to the UK Department for Digital, Culture, Media and Sport. Instead, the regulations put more onus on the companies themselves, requiring them to explicitly state what content is “acceptable” on their platforms and enforce these conditions effectively, with full transparency.

The move comes, as the BBC reports, after public calls for social media companies to take more responsibility for harmful content, following the death of Molly Russell, who died by suicide in 2017. Russell’s father held Instagram partly responsible after finding content related to suicide and self-harm on accounts his daughter was following.

The government will set all legislation, but Ofcom will be able to make decisions on holding companies to account without needing to push for new legislation. We’ll get more of an idea of the full enforcement powers Ofcom will be wielding when the government publishes a full consultation response in spring 2020.

The Online Harms White Paper was unveiled in April 2019 as a means to crack down on social media companies which don’t stop harmful content. The paper, released by the Department for Digital, Culture, Media and Sport, introduced the need for a new independent regulator for social media companies, file hosting sites, and other companies which host content. And now, Ofcom is set to be that regulator.

This also comes amid a major crackdown on how tech and social media companies use children’s data in the UK, with the announcement of new privacy protection standards in January.

-

Entertainment6 days ago

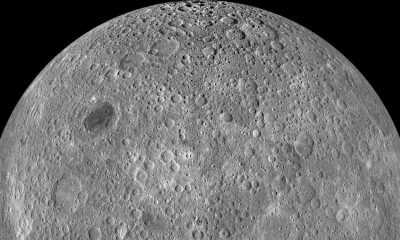

Entertainment6 days agoWhat’s on the far side of the moon? Not darkness.

-

Business7 days ago

Business7 days agoHow Rubrik’s IPO paid off big for Greylock VC Asheem Chandna

-

Business6 days ago

Business6 days agoTikTok faces a ban in the US, Tesla profits drop and healthcare data leaks

-

Business5 days ago

Business5 days agoLondon’s first defense tech hackathon brings Ukraine war closer to the city’s startups

-

Business7 days ago

Business7 days agoPhoto-sharing community EyeEm will license users’ photos to train AI if they don’t delete them

-

Entertainment6 days ago

Entertainment6 days agoHow to watch ‘The Idea of You’: Release date, streaming deals

-

Entertainment5 days ago

Entertainment5 days agoMark Zuckerberg has found a new sense of style. Why?

-

Business5 days ago

Business5 days agoHumanoid robots are learning to fall well