Twitter will label deepfakes and other ‘manipulated media’

As election season heats up, Twitter revealed its plan for fighting deepfakes and other deceptive video.

The company said that, beginning March 5, it will add warning labels to tweets containing videos with "synthetic and manipulated media," and that it will remove such tweets entirely when they are likely to "cause serious harm." The update comes nearly three months after the platform asked users to weigh in on what such a policy should look like.

Notably, Twitter's policy isn't limited to "deepfakes," typically understood as videos manipulated with AI-powered technology. During a call with reporters, Twitter executives repeatedly pointed out that the policy also covers videos altered with more basic editing tools, such as the infamous Nancy Pelosi video.

“Our approach does not focus on the specific technology used to manipulate or fabricate media,” Twitter’s head of site integrity, Yoel Roth, said. “Whether you’re using advanced machine learning tools, or just slowing down a video using a 99 cent app on your phone, our focus under this policy is to look at the outcome, not how it was achieved.”

Under the new rules, which Twitter described as a "living document" that could change over time, the company says it can take a range of actions depending on the video and the context in which it's shared. In some cases, tweets will carry warning labels that may appear before a user likes or retweets them. And, in a Facebook-like move, a tweet could also be subjected to "reduced visibility."

The company shared the following graphic to explain how it will decide which content might be labeled and which might be removed entirely.

How Twitter plans to apply its rules in order to determine what action to take.

But even with the new guidance, there’s considerable ambiguity. Every scenario Twitter outlines in the graphic above qualifies that an action “may” be taken or that it’s “likely” to happen, leaving the door open for the company to make exceptions.

As for whether or not politicians and public figures will be subject to the same rules, the company said that its rules will cover all such videos, regardless of who has tweeted them.

“If the media is altered or fabricated, regardless of who the individual is, this policy would still apply,” Twitter’s VP of trust and safety, Del Harvey, said. “It’s difficult to comment on hypotheticals because each instance is going to depend on the context surrounding the tweet, what’s happening in the world when it’s tweeted, and so on. But this policy does apply to media across the board.”

-

Entertainment6 days ago

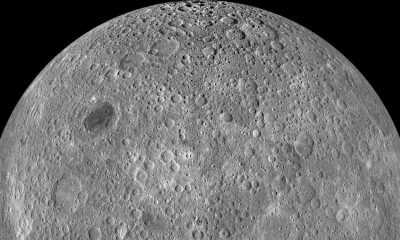

Entertainment6 days agoWhat’s on the far side of the moon? Not darkness.

-

Business7 days ago

Business7 days agoHow Rubrik’s IPO paid off big for Greylock VC Asheem Chandna

-

Business6 days ago

Business6 days agoTikTok faces a ban in the US, Tesla profits drop and healthcare data leaks

-

Business5 days ago

Business5 days agoLondon’s first defense tech hackathon brings Ukraine war closer to the city’s startups

-

Business7 days ago

Business7 days agoPhoto-sharing community EyeEm will license users’ photos to train AI if they don’t delete them

-

Entertainment6 days ago

Entertainment6 days agoHow to watch ‘The Idea of You’: Release date, streaming deals

-

Entertainment5 days ago

Entertainment5 days agoMark Zuckerberg has found a new sense of style. Why?

-

Business5 days ago

Business5 days agoHumanoid robots are learning to fall well