Technology

Snap diversity report debuts new anti-bias engineering projects

Snap engineers are working to root out racial bias from the company’s products, even as progress on diversifying its workforce remains slow.

Snap, Inc. released its second annual diversity report Thursday, and the results are mixed. The company made modest gains among underrepresented racial and ethnic groups, whose share at the leadership level rose by 0.5 percentage points to 13.6 percent.

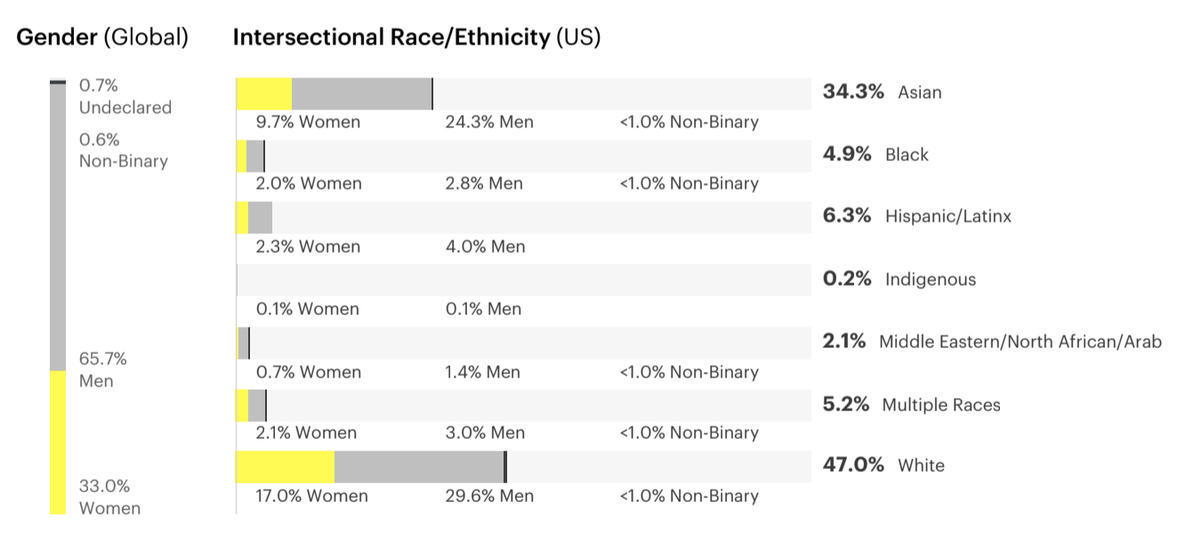

There was notable improvement in hiring Black employees, particularly Black women (whose share of new hires grew from 2 percent to 5 percent), and in elevating women to tech leadership roles. On the other hand, retention of Hispanic employees dipped slightly, as did representation of Asian people in leadership roles, which fell to 14.3 percent (disproportionately low compared with the 34.3 percent representation of Asian people across the company as a whole).

Overall, Snap’s representation of Black and Hispanic/Latinx employees tracks with the rest of the tech industry, at around 4-6 percent each. Just under half of Snap employees — 47 percent — are white.

Modest improvements and slight losses alike characterize Snap’s year in DEI workforce demographics.

Image: Screenshot: Snap, Inc.

Snap has collected diversity data internally for years, but it released its first-ever public diversity report in July 2020. CEO Evan Spiegel was reportedly hesitant to release the data because he said he feared it would do more harm than good by potentially giving numerical credence to the notion that people of color are underrepresented at tech companies. Ultimately, Snap released the data, which showed the company fell in line with most tech company averages.

As with the first report, demographics were not the sole focus of this year’s report. This year, Snap highlighted the work it has done to share the experiences of people of color with the workforce as a whole, empower Employee Resource Groups with broader access to leadership, foster diverse talent with training programs that have led to internships at the company, make diversity, equity, and inclusion (DEI) a performance metric across the company, and more.

It’s also ensuring that its Discover content accurately reflects the demographics of its users by surveying and studying viewers. It has increased representation for people of color and LGBTQ+ individuals in Discover shows to over 50 percent. This initiative on the content team in particular is notable, in part, because Snap came under fire last year after employees shared, and Mashable reported on, past racially biased practices on the content team. Snap subsequently conducted an investigation, and managers involved with the allegations have since left the company.

“Our ambition is to ensure that our Discover content platform, which we intentionally curate, features content that reflects the diversity of Snapchatters and their interests,” the report says.

Engineering equity

As a tech company, Snap has also focused these efforts on the product, not just the people. This has resulted in initiatives around removing unconscious bias and racial insensitivity from coding language, machine learning, and even the mechanics of a camera.

“We are both rewriting our machine learning algorithms to remove unconscious bias and adopting inclusive design principles into the way we develop our products at the front end,” the report says. “We are committed to building a more inclusive camera for Snapchat, one that is accessible to anyone, inclusive of everyone—in terms of age, status, skin tone, body size, ability, and language—and is shaped by diverse perspectives.”

It’s early days for all of these projects, but Snap wanted to share its initial efforts, even if the details are somewhat light.

A Snap spokesperson shared with Mashable the origin of the camera inclusivity project. Bertrand Saint-Preux, an engineer on Snap’s camera team who is Black, reflected on his personal experience using the Snap camera and noticed that it did not always represent him accurately. That led him to dig into the history of the camera, where he found that the way cameras calibrate light was built around white skin as the default. Apertures are often still not wide enough today to capture all skin tones.

Saint-Preux led an internal effort to combat the camera’s racially biased historical legacy. Now, Snap is collaborating with experts in film and TV photography and videography to ensure its camera (which, on smartphones, uses the phone’s physical hardware but runs on Snap software) accurately captures skin tones and facial features for all people. This includes improving how the front-facing flash handles low light (important for accurately lighting a subject and capturing color and detail). The company is also working on photo processing after a photo is taken to make sure the resulting image is accurate.

A second initiative revolves around machine learning (ML). ML powers a lot of the Snap camera experience, from photo editing to AR effects. However, because artificial intelligence systems like ML reflect the unconscious biases of their makers, they can function in an unequal way in practice.

Part of the reason is that training datasets, such as collections of face photos, are often not racially diverse, so a white face becomes the default for identifying a face. This makes systems like Snap’s face tracking less effective for people of color. The ineffectiveness also comes from the priorities written into algorithms. Snap explains: “If the algorithm has not been optimized for variance — if it is not programmed to be as good at looking for anything that’s not White — then it will fail at seeing darker faces.” In the report, Snap acknowledges that finding better training data has been a challenge.

“While ML is a powerful tool that can help personalize a Snapchatter’s experience, it’s inherently designed to learn and optimize in aggregates,” the report says. “So while the overall product experience may improve per global measures, we may be coming up short for certain populations within our community.”

Snap is trying to “optimize for variance” as it audits its algorithms. It is investigating times when face-tracking algorithms don’t work very well, and creating systems to identify these scenarios, predict when they may occur, and, ultimately, fix them.
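To make the report's point about aggregates concrete, here is a minimal sketch of what a disaggregated audit could look like. It is not Snap's method: the group labels, the sample counts, the `tolerance` threshold, and the function names are all made up for illustration. It only shows how an overall success rate can look healthy while one group's rate lags far behind.

```python
from collections import defaultdict


def detection_rates(records):
    """Return (overall_rate, {group: rate}) for face-detection outcomes.

    `records` is an iterable of (group_label, detected) pairs, where
    `detected` is True when the face was found. Assumes at least one record.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for group, detected in records:
        totals[group] += 1
        hits[group] += int(detected)
    overall = sum(hits.values()) / sum(totals.values())
    per_group = {g: hits[g] / totals[g] for g in totals}
    return overall, per_group


def flag_gaps(per_group, overall, tolerance=0.05):
    """List groups whose rate trails the overall rate by more than `tolerance`."""
    return sorted(g for g, rate in per_group.items() if overall - rate > tolerance)


# Hypothetical data: group "A" dominates the sample, group "B" is small.
records = (
    [("A", True)] * 88 + [("A", False)] * 2
    + [("B", True)] * 6 + [("B", False)] * 4
)

overall, per_group = detection_rates(records)
flagged = flag_gaps(per_group, overall)

print(f"overall: {overall:.2f}")
for group, rate in sorted(per_group.items()):
    print(f"group {group}: {rate:.2f}")
print("flagged:", flagged)
```

In this invented sample the overall rate is 94 percent, yet group "B" succeeds only 60 percent of the time. A single global metric would hide that gap, which is the shortfall the report describes.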

A third engineering initiative is to root out racially insensitive language from the actual code that powers Snap. For example, some coding uses terminology like “master” and “slave” to classify things. Recognizing the racial bias in coding language has launched a reckoning in the coding community, and now it’s come to Snap, too. Last year, senior engineer Tammarrian Rogers became Snap’s first Director of Engineering Inclusion to spearhead the project.
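As a rough illustration of how such a cleanup might start, the sketch below scans source text for the two terms mentioned above and suggests alternatives. The replacement words ("primary", "replica"), the function name, and the sample snippet are assumptions for illustration, not Snap's tooling or vocabulary.

```python
import re

# Hypothetical suggestions; a real project would agree on its own vocabulary.
SUGGESTIONS = {"master": "primary", "slave": "replica"}

# Case-insensitive substring match. Deliberately simple: it will also hit
# words such as "masterpiece", so results need human review.
PATTERN = re.compile("|".join(SUGGESTIONS), re.IGNORECASE)


def find_terms(text):
    """Return (line_number, term, suggestion) for each match in `text`."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in PATTERN.finditer(line):
            term = match.group(0).lower()
            findings.append((lineno, term, SUGGESTIONS[term]))
    return findings


# Made-up snippet standing in for a source file.
sample = "db_master = connect()\nfor slave in slaves:\n    sync(slave)\n"

findings = find_terms(sample)
for lineno, term, suggestion in findings:
    print(f"line {lineno}: '{term}' -> consider '{suggestion}'")
```

A scan like this only surfaces candidates. Renaming identifiers safely still requires checking every caller, configuration file, and external system that depends on the old names.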

It is certainly convenient that Snap is sharing these early-stage engineering initiatives in the same breath as its underwhelming diversity statistics. However, that doesn’t diminish their importance or their potential to raise the DEI bar for the tech industry as a whole.
