Scary deepfake tool lets you put words into someone’s mouth

If you needed more evidence that AI-based deepfakes are incredibly scary, we present to you a new tool that lets you type in text and generate a video of an actual person saying those exact words.

A group of scientists from Stanford University, the Max Planck Institute for Informatics, Princeton University, and Adobe Research created a tool and presented the research in a paper (via The Verge), titled “Text-based Editing of Talking-head Video.” The paper explains the methods used to “edit talking-head video based on its transcript to produce a realistic output video in which the dialogue of the speaker has been modified.”

And while the techniques used to achieve this are very complex, using the tool is frighteningly simple.

A YouTube video accompanying the research shows several clips of real people speaking real sentences (yes, apparently we’re at that point in history where everything can be faked). Then part of a sentence is changed (for example, “napalm” in “I love the smell of napalm in the morning” is swapped for “french toast”), and you see the same person convincingly uttering the new sentence.

Getting this tool to work so simply requires techniques that automatically annotate a talking-head video with “phonemes, visemes, 3D face pose and geometry, reflectance, expression and scene illumination per frame.” When the transcript of the speech in the video is altered, the researchers’ algorithm stitches all the elements back together seamlessly, while the lower half of the speaker’s face is rendered to match the new text.

On the input side, the tool lets a user easily add, remove, or alter words in a talking-head video, or even create entirely new sentences. There are limitations: the tool works only on talking-head videos, for example, and the results vary widely depending on how much of the text is altered or omitted. But the researchers note that their work is just the “first important step” towards “fully text-based editing and synthesis of general audio-visual content,” and suggest several methods for improving their results.

Videos generated by the tool were shown to a group of 138 people; in 59.6% of their responses, the fake videos were rated as real. For comparison, the same group identified the real videos as real 80.6% of the time.

The tool isn’t widely available, and in a blog post, the researchers acknowledge the complicated ethical considerations of releasing it. It can be used for legitimate purposes, such as building better editing tools for film post-production, but it can also be misused. “We acknowledge that bad actors might use such technologies to falsify personal statements and slander prominent individuals,” the post says. The researchers propose several techniques for making such a tool harder to misuse, including watermarking the video. But it’s quite obvious that it’s only a matter of time before these types of tools are widely available, and it’s hard to imagine they’ll be used solely for noble purposes.

-

Business6 days ago

Business6 days agoFormer top SpaceX exec Tom Ochinero sets up new VC firm, filings reveal

-

Business7 days ago

Business7 days agoTesla layoffs hit high performers, some departments slashed, sources say

-

Entertainment7 days ago

Entertainment7 days agoChatGPT vs. Gemini: Which AI chatbot won our 5-round match?

-

Business5 days ago

Business5 days agoConsumer Financial Protection Bureau fines BloomTech for false claims

-

Business4 days ago

Business4 days agoLangdock raises $3M with General Catalyst to help businesses avoid vendor lock-in with LLMs

-

Entertainment3 days ago

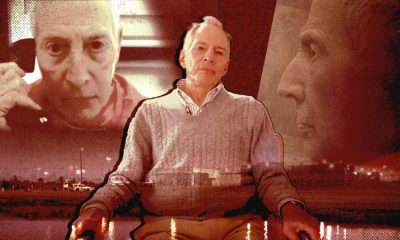

Entertainment3 days agoWhat Robert Durst did: Everything to know ahead of ‘The Jinx: Part 2’

-

Business6 days ago

Business6 days agoKlarna credit card launches in the US as Swedish fintech grows its market presence

-

Entertainment7 days ago

Entertainment7 days agoHow to watch ‘The Sympathizer’: Release date and streaming deals