Technology

More than 400 channels deleted as advertisers flee over child predators

YouTube is in crisis mode yet again.

On Wednesday night, the online video giant announced it had banned more than 400 channels and disabled comments on tens of millions of videos following a growing controversy concerning child exploitation on the platform. However, many major brands, like Disney, AT&T, Nestle, and Fortnite, are jumping ship and halting all YouTube advertising in its wake.

Over the weekend, a YouTube video highlighting child predators’ rampant use of the platform went viral. YouTuber Matt Watson posted the video, which walks viewers through how a simple YouTube search can easily uncover “soft” pedophilia rings on the video service.

Child exploitation is clearly an internet-wide issue. However, Watson’s video explored how YouTube’s recommendation algorithm creates a problem unique to the site. As shown in Watson’s viral upload, a search for popular YouTube video niches, such as “bikini haul,” leads the platform to recommend similar content to viewers. If a user clicks on a single recommended video featuring a child, the viewer can get sucked into a “wormhole,” as Watson calls it, where YouTube’s recommendation algorithm proceeds to push content that exclusively features children.

These recommended videos then become inundated with comments from child predators. Oftentimes, these comments directly hyperlink timecodes from within the video. The linked timecodes often forward a user to a point in the video where the minor may, innocently, be found in compromising positions. On these video pages, the commenters also share their contact details so that they can trade child pornography with other users off YouTube’s platform.

On top of all of this, Watson pointed out that many of these videos were monetized. Basically, big brands’ advertisements were appearing right next to these comments.

The video quickly went viral on Reddit, and viewers soon started spreading the hashtag #YouTubeWakeUp across social media, urging the company to take action.

UPDATE: @YouTube @YTCreators left a comment and provided an update on what they’ve done to combat horrible people on the site in the last 48 hours.

TLDR: Disabled comments on tens of millions of videos. Terminated over 400 channels. Reported illegal comments to law enforcement. pic.twitter.com/zFHFfkX9FD

— Philip DeFranco (@PhillyD) February 21, 2019

While many popular creators covered the issue, PhillyD received a comment from the official YouTube channel after he posted his video.

“We appreciate you raising awareness of this with your fans, and that you realize all of us at YouTube are working incredibly hard to root out horrible behavior on our platform,” said YouTube in its comment.

“In the last 48 hours, beyond our normal protections we’ve disabled comments on tens of millions of videos,” the statement continued. “We’ve also terminated over 400 channels for the comments they left on videos, and reported illegal comments to law enforcement.”

This isn’t the first time YouTube has been accused of having child exploitation issues. In 2017, a slew of problematic videos specifically marketed to children were found on the platform, oftentimes directly on the YouTube Kids app. These videos presented popular children’s characters with adult themes, sometimes in sexually explicit fashion.

In response, YouTube overhauled its kids app and put new moderation policies in place.

Advertisers have also boycotted YouTube en masse over scandals on the platform before. The causes of previous ad pullouts have ranged from terrorist content to racist hate speech.

To tackle advertisers’ concerns, YouTube has often rolled out new features so brands can control where their ads appear.

Interestingly, AT&T, one of the companies now halting ads over this latest scandal, ended its two-year advertising hiatus, which was prompted by previous YouTube issues, only last month.

YouTube’s platform changes don’t always go off without a hitch. The company has consistently made it harder for actual content creators to monetize their channels. In the wake of Logan Paul’s “suicide forest” controversy, the company instituted new requirements for YouTube Partner eligibility. These new policies, along with its latest “duplicative content” measure, have made it much harder for new YouTubers to monetize content. Big creators, like Paul, are often left unscathed by these changes.

In fact, legitimate YouTube creators have already been impacted by YouTube’s latest response to the child predators uncovered on the platform.

Popular Pokémon YouTubers were swept up in the bans over “child abuse.” It’s believed that YouTube’s system to ban these types of accounts saw the term “CP,” which stands for “Combat Points” in Pokémon GO, and mistook it for a popular acronym among child predators for “child pornography.” The Pokémon YouTubers’ accounts have since been restored.

While dealing with child predators is a problem of its own, it’s clear that many of YouTube’s issues stem from its recommendation algorithm. Just recently, the company announced it was working on excluding certain types of conspiracy-related content from its recommended videos.

In the company’s comment to PhillyD, YouTube acknowledges that it still has a ways to go.

“There is more to be done, and we are continuing to grow our team in order to keep people safe.”

-

Business7 days ago

Business7 days agoLangdock raises $3M with General Catalyst to help businesses avoid vendor lock-in with LLMs

-

Entertainment6 days ago

Entertainment6 days agoWhat Robert Durst did: Everything to know ahead of ‘The Jinx: Part 2’

-

Entertainment6 days ago

Entertainment6 days agoThis nova is on the verge of exploding. You could see it any day now.

-

Business6 days ago

Business6 days agoIndia’s election overshadowed by the rise of online misinformation

-

Business6 days ago

Business6 days agoThis camera trades pictures for AI poetry

-

Business6 days ago

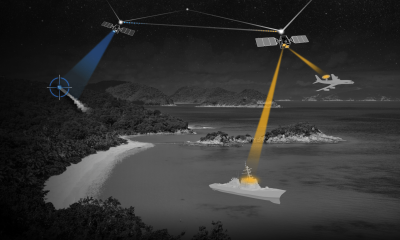

Business6 days agoCesiumAstro claims former exec spilled trade secrets to upstart competitor AnySignal

-

Business4 days ago

Business4 days agoTikTok Shop expands its secondhand luxury fashion offering to the UK

-

Business6 days ago

Business6 days agoBoston Dynamics unveils a new robot, controversy over MKBHD, and layoffs at Tesla