Technology

Facebook, YouTube answer to UK lawmakers for Christchurch video

Image: ANTHONY WALLACE/AFP/Getty Images

Representatives from Facebook, YouTube, and Twitter were grilled and admonished on Tuesday by UK lawmakers angry at the spread of extremist and criminal content.

The UK parliamentary committee hearing was spurred by the spread of the graphic Christchurch shooting video, which the platforms struggled to contain. The shooter, who killed 50 people and injured 50 more at two mosques in New Zealand, livestreamed his crime on Facebook.

Both liberal and conservative politicians slammed the companies for allowing hateful content to proliferate and, in the case of YouTube, for actively promoting it.

“What on Earth are you doing!? You’re accessories to radicalization, accessories to crimes,” MP Stephen Doughty said, according to BuzzFeed.

“You are making these crimes possible, you are facilitating these crimes,” chairwoman Yvette Cooper said. “Surely that is a serious issue.”

Facebook’s Neil Potts said that he could not rule out that there were still versions of the Christchurch shooting video on the platform. And YouTube’s director of public policy, Marco Pancini, acknowledged that the platform’s recommendation algorithms were driving people towards more extremist content, even if that’s not what they “intended.”

He says that Facebook’s automation can struggle to detect banned content if it is deliberately manipulated, edited and changed to “subvert the system”

— Matthew Thompson (@mattuthompson) April 24, 2019

Stuart Macdonald MP: “If you’re directing innocent users towards extremist, hateful content…. I can’t really see an excuse for why these algorithms are staying in place”

Pancini: “It’s a very good question Mr McDonald”

— Mark Di Stefano (@MarkDiStef) April 24, 2019

Chairwoman Cooper was particularly upset after Facebook said it doesn’t report all crimes to the police. Potts said that Facebook reports crimes when there is a threat to life, and assesses crimes committed on the platform on a “case by case basis.” Twitter and YouTube said they had similar policies.

“There are different scales of crimes,” Potts said. To which Cooper responded: “A crime is a crime… who are you to decide what’s a crime that should be reported, and what’s a crime that shouldn’t be reported?”

MPs took it upon themselves to test how YouTube’s algorithm promotes extremist content. Prior to the hearing, they searched terms like “British news,” and in each case were directed to far-right, inflammatory content by the recommendation engine.

“You are maybe being gamed by extremists, you are effectively providing a platform for extremists, you are enabling extremism on your platforms,” Cooper said. “Yet you are continuing to provide platforms for this extremism, you are continuing to show that you are not keeping up with it, and frankly, in the case of YouTube, you are continuing to promote it. To promote radicalization that has huge damaging consequences to families’ lives and to communities right across the country.”

In addition to removing the original livestreamed video, Facebook said it removed 1.5 million instances of the video, with 1.2 million of those videos blocked at upload within 24 hours of the attack.

The Christchurch video showed how extremists exploit social platforms to spread their message and radicalize users. Facebook, YouTube, and Twitter are all working to increase both automated and human content moderation, building new tools and hiring thousands of employees. But lawmakers asserted that these are band-aids on systemic problems, and that extremists are using the services exactly as they were meant to be used: to spread and share content, ignite passions, and give everyone a platform.

-

Business6 days ago

Business6 days agoLangdock raises $3M with General Catalyst to help businesses avoid vendor lock-in with LLMs

-

Entertainment5 days ago

Entertainment5 days agoWhat Robert Durst did: Everything to know ahead of ‘The Jinx: Part 2’

-

Entertainment5 days ago

Entertainment5 days agoThis nova is on the verge of exploding. You could see it any day now.

-

Business5 days ago

Business5 days agoIndia’s election overshadowed by the rise of online misinformation

-

Business4 days ago

Business4 days agoThis camera trades pictures for AI poetry

-

Business5 days ago

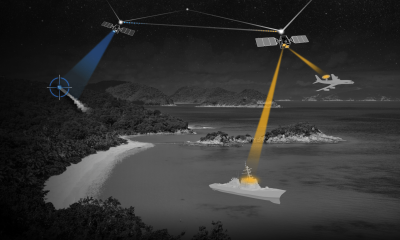

Business5 days agoCesiumAstro claims former exec spilled trade secrets to upstart competitor AnySignal

-

Business7 days ago

Business7 days agoScreen Skinz raises $1.5 million seed to create custom screen protectors

-

Entertainment7 days ago

Entertainment7 days agoDating culture has become selfish. How do we fix it?